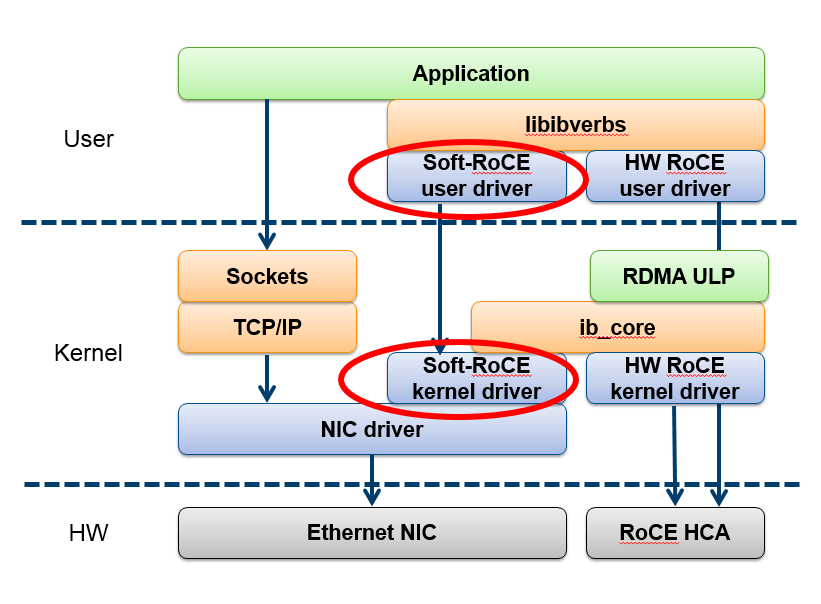

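Soft-RoCE can emulate the functionality of a RoCE NIC on top of any Ethernet interface. When no RoCE-capable physical NIC (such as a Mellanox card) is available, the kernel's Soft-RoCE module can emulate one on an ordinary Ethernet interface.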

Soft-RoCE Architecture

In this architecture, the Soft-RoCE kernel driver module takes care of the RoCE network layer (UDP/IP) processing.

Getting Started with Soft-RoCE

First, load the Soft-RoCE kernel driver module (rdma_rxe.ko):

```shell
$ sudo modprobe rdma_rxe
$ modinfo rdma_rxe
filename: /lib/modules/5.4.0-64-generic/kernel/drivers/infiniband/sw/rxe/rdma_rxe.ko
alias: rdma-link-rxe
license: Dual BSD/GPL
description: Soft RDMA transport
srcversion: 12ABDA933B29E9F5A5ACBEA
depends: ib_core,ip6_udp_tunnel,udp_tunnel,ib_uverbs
```
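The `modinfo` output only shows that the module is available on disk. When scripting the setup, a minimal sketch like the following can check whether `rdma_rxe` is actually loaded by reading `/proc/modules`. The function name `module_loaded` is our own hypothetical helper, not part of any tool, and the optional second argument (a saved listing file) is only there to make it easy to test.

```shell
#!/usr/bin/env bash
# Hypothetical helper: return 0 if kernel module NAME appears in a
# /proc/modules-style listing, 1 otherwise.
# Usage: module_loaded NAME [LISTING_FILE]   (defaults to /proc/modules)
module_loaded() {
    local name=$1
    local listing=${2:-/proc/modules}
    # The first whitespace-separated field of each line is the module name
    # (always spelled with underscores, e.g. rdma_rxe).
    awk -v m="$name" '$1 == m { found = 1 } END { exit !found }' "$listing"
}
```

For example, `module_loaded rdma_rxe || sudo modprobe rdma_rxe` loads the module only when it is not already present.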

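Create a RoCE device on top of an Ethernet interface (all command examples here were run on Ubuntu 20.04; on CentOS 7, use the rxe_cfg command from the libibverbs package instead, while CentOS 8 can use the rdma command directly):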

```shell
$ sudo rdma link add r1 type rxe netdev enp2s0
$ rdma link
link rocep2s0/1 state ACTIVE physical_state LINK_UP netdev enp2s0
$ rdma dev
0: rocep2s0: node_type ca node_guid de4a:3eff:fe49:2ce7 sys_image_guid de4a:3eff:fe49:2ce7
$ rdma resource
0: rocep2s0: pd 1 cq 1 qp 1 cm_id 0 mr 0 ctx 0
```

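Note that on Ubuntu 20.04 the device name r1 given on the command line has no effect for non-loopback interfaces (anything other than lo): a name such as rocep2s0 is generated automatically instead. CentOS 8 does not have this problem.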
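Because the generated name (rocep2s0 here) can differ from the one passed to `rdma link add`, a script that later runs `ibv_devinfo -d` or `rdma link del` is safer looking the name up than hard-coding `r1`. Below is a minimal sketch, assuming `rdma link` prints one `link <dev>/<port> ... netdev <ifname>` line per port, as in the output above; `rdma_dev_for_netdev` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `rdma link` output on stdin and print the RDMA
# device name(s) bound to the given network interface.
# Assumed line format:  link <dev>/<port> state ... netdev <ifname>
rdma_dev_for_netdev() {
    local netdev=$1
    awk -v nd="$netdev" '
        $1 == "link" {
            # Find the "netdev" keyword and compare the field after it.
            for (i = 2; i < NF; i++) {
                if ($i == "netdev" && $(i + 1) == nd) {
                    split($2, a, "/")   # "rocep2s0/1" -> "rocep2s0"
                    print a[1]
                }
            }
        }'
}
```

For example, `dev=$(rdma link | rdma_dev_for_netdev enp2s0)` followed by `sudo rdma link del "$dev"` removes the Soft-RoCE device without knowing its generated name in advance.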

Inspect the newly created RoCE device:

```shell
$ ibv_devices
device node GUID
------ ----------------
rocep2s0 de4a3efffe492ce7
$ ibv_devinfo
hca_id: rocep2s0
transport: InfiniBand (0)
fw_ver: 0.0.0
node_guid: de4a:3eff:fe49:2ce7
sys_image_guid: de4a:3eff:fe49:2ce7
vendor_id: 0x0000
vendor_part_id: 0
hw_ver: 0x0
phys_port_cnt: 1
port: 1
state: PORT_ACTIVE (4)
max_mtu: 4096 (5)
active_mtu: 1024 (3)
sm_lid: 0
port_lid: 0
port_lmc: 0x00
link_layer: Ethernet
$ ibstat
CA 'rocep2s0'
CA type:
Number of ports: 1
Firmware version:
Hardware version:
Node GUID: 0xde4a3efffe492ce7
System image GUID: 0xde4a3efffe492ce7
Port 1:
State: Active
Physical state: LinkUp
Rate: 2.5
Base lid: 0
LMC: 0
SM lid: 0
Capability mask: 0x00810000
Port GUID: 0xde4a3efffe492ce7
Link layer: Ethernet
```
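In the `ibv_devinfo` output, the number in parentheses after each MTU is the verbs enum code (256 = 1, 512 = 2, 1024 = 3, 2048 = 4, 4096 = 5), so `max_mtu: 4096 (5)` and `active_mtu: 1024 (3)` are two distinct values: the largest MTU the device supports and the one currently in use. Before starting a test, a script may want to confirm that the port is up. The following is a minimal sketch that parses the `key: value` lines of `ibv_devinfo` output from stdin; `devinfo_field` and `port_is_active` are hypothetical helper names, and the parsing assumes the layout shown above (leading tabs or spaces are tolerated).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of the first "key: value" line in
# ibv_devinfo output (read from stdin) whose key is exactly KEY.
devinfo_field() {
    local key=$1
    awk -v k="$key" '
        {
            line = $0
            sub(/^[ \t]+/, "", line)            # drop indentation
            if (index(line, k ":") == 1) {      # line starts with "KEY:"
                val = substr(line, length(k) + 2)
                sub(/^[ \t]+/, "", val)         # trim around the value
                sub(/[ \t]+$/, "", val)
                print val
                exit
            }
        }'
}

# Hypothetical helper: succeed when the port "state" is PORT_ACTIVE.
# Assumes a single-port device such as rocep2s0 above.
port_is_active() {
    case "$(devinfo_field state)" in
        PORT_ACTIVE*) return 0 ;;
        *) return 1 ;;
    esac
}
```

For example, `ibv_devinfo -d rocep2s0 | port_is_active && echo ready`, or `ibv_devinfo -d rocep2s0 | devinfo_field active_mtu` to print `1024 (3)`.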

Delete the RoCE device:

```shell
$ sudo rdma link del rocep2s0
```

Testing with rping

Server side:

```shell
$ rping -a 192.168.137.3 -s -P -d
created cm_id 0x55b13369c390
rdma_bind_addr successful
rdma_listen
cma_event type RDMA_CM_EVENT_CONNECT_REQUEST cma_id 0x7f3a04000cd0 (child)
child cma 0x7f3a04000cd0
created pd 0x7f39fc000b60
created channel 0x7f39fc000b80
created cq 0x7f39fc000ba0
created qp 0x7f39fc000c50
rping_setup_buffers called on cb 0x55b13369cda0
allocated & registered buffers...
accepting client connection request
cq_thread started.
cma_event type RDMA_CM_EVENT_ESTABLISHED cma_id 0x7f3a04000cd0 (child)
ESTABLISHED
recv completion
Received rkey 2608 addr 5560c0cb3d50 len 64 from peer
server received sink adv
server posted rdma read req
rdma read completion
server received read complete
send completion
server posted go ahead
recv completion
Received rkey 2504 addr 5560c0cb0380 len 64 from peer
server received sink adv
rdma write from lkey 2945 laddr 7f39fc000dd0 len 64
rdma write completion
server rdma write complete
send completion
server posted go ahead
recv completion
Received rkey 2608 addr 5560c0cb3d50 len 64 from peer
server received sink adv
server posted rdma read req
rdma read completion
server received read complete
server posted go ahead
send completion
...
cma_event type RDMA_CM_EVENT_DISCONNECTED cma_id 0x7f3a04000cd0 (child)
server DISCONNECT EVENT...
wait for RDMA_READ_ADV state 10
rping_free_buffers called on cb 0x55b13369cda0
```

Client side:

```shell
$ rping -a 192.168.137.3 -c -d
created cm_id 0x5560c0cb3390
cma_event type RDMA_CM_EVENT_ADDR_RESOLVED cma_id 0x5560c0cb3390 (parent)
cma_event type RDMA_CM_EVENT_ROUTE_RESOLVED cma_id 0x5560c0cb3390 (parent)
rdma_resolve_addr - rdma_resolve_route successful
created pd 0x5560c0cab170
created channel 0x5560c0cab1b0
created cq 0x5560c0cb3b20
created qp 0x5560c0cb3bd0
rping_setup_buffers called on cb 0x5560c0ca8810
allocated & registered buffers...
cq_thread started.
cma_event type RDMA_CM_EVENT_ESTABLISHED cma_id 0x5560c0cb3390 (parent)
ESTABLISHED
rmda_connect successful
RDMA addr 5560c0cb3d50 rkey 2608 len 64
send completion
recv completion
RDMA addr 5560c0cb0380 rkey 2504 len 64
send completion
recv completion
RDMA addr 5560c0cb3d50 rkey 2608 len 64
send completion
recv completion
RDMA addr 5560c0cb0380 rkey 2504 len 64
send completion
recv completion
RDMA addr 5560c0cb3d50 rkey 2608 len 64
send completion
recv completion
```
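rping runs a simple ping-pong loop. In each iteration the client SENDs the rkey/addr/len of its source buffer, the server RDMA READs the ping data from it and SENDs a "go ahead". The client then advertises its sink buffer, the server RDMA WRITEs the data into it and SENDs another "go ahead". The logs above show this: rkey 2608 / addr 5560c0cb3d50 on the server is always followed by an RDMA read, and rkey 2504 / addr 5560c0cb0380 by an RDMA write. Below is a minimal sketch, assuming the `-d` message wording shown above, that counts the two kinds of completions in a saved server log; `rping_summary` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count RDMA READ and RDMA WRITE completions in an
# "rping -s -d" log read from stdin and print "reads=N writes=M".
# Assumes the debug messages "rdma read completion" and
# "rdma write completion" as printed in the server log above.
rping_summary() {
    awk '
        /rdma read completion/  { r++ }
        /rdma write completion/ { w++ }
        END { printf "reads=%d writes=%d\n", r, w }'
}
```

For example, capture the server log with `rping -s -a 192.168.137.3 -P -d 2>&1 | tee server.log` and then run `rping_summary < server.log`. If the client runs a fixed number of iterations (rping's `-C` option), reads and writes should come out equal, or differ by one if the run was interrupted between the read and the write.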

Implementation

The Soft-RoCE driver code lives in the following directory of the Linux source tree:

linux/drivers/infiniband/sw/rxe/

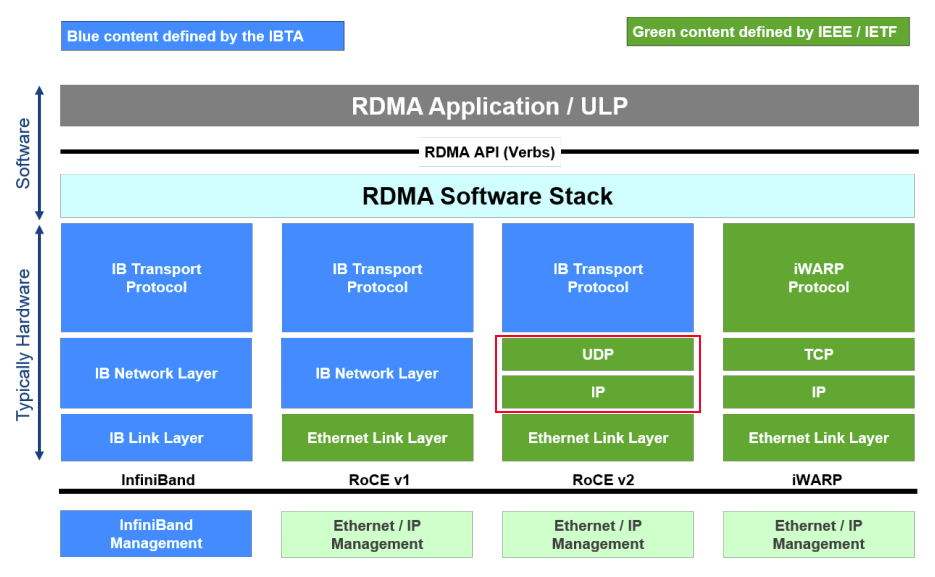

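The code connecting the RoCE network layer (UDP/IP) to the kernel networking stack is relatively simple: packets arriving on UDP port 4791 are received through the kernel's UDP tunneling framework (see rxe_net.c/rxe_setup_udp_tunnel), and outgoing UDP packets are sent by calling the IP-layer interfaces directly (see rxe_net.c/send).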
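Since received RoCE v2 packets arrive on UDP port 4791, one way to confirm that the driver's tunnel socket exists is to look for that port in the kernel's UDP socket table. Below is a minimal sketch, assuming the kernel-owned socket is listed in `/proc/net/udp` the same way other kernel UDP tunnel sockets are (an assumption we have not checked on every kernel version); `udp_port_bound` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: return 0 if a UDP socket is bound to PORT according to
# a /proc/net/udp-style table, 1 otherwise.
# Usage: udp_port_bound PORT [TABLE_FILE]   (defaults to /proc/net/udp)
# Assumption: the rxe kernel tunnel socket appears in this table.
udp_port_bound() {
    local port=$1
    local table=${2:-/proc/net/udp}
    local hex
    # local_address (column 2) is HEXIP:HEXPORT, port as 4 uppercase
    # hex digits, e.g. 4791 -> 12B7.
    hex=$(printf '%04X' "$port")
    awk -v p="$hex" '
        NR > 1 { split($2, a, ":"); if (a[2] == p) found = 1 }
        END { exit !found }' "$table"
}
```

For example, `udp_port_bound 4791 && echo listening`; for IPv6, pass `/proc/net/udp6` as the second argument. To watch the encapsulated traffic itself during an rping run, `sudo tcpdump -i enp2s0 udp port 4791` captures packets on that port.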

References

RDMA over Converged Ethernet

https://en.wikipedia.org/wiki/RDMA_over_Converged_Ethernet

SOFT-ROCE RDMA TRANSPORT IN A SOFTWARE IMPLEMENTATION

https://www.roceinitiative.org/wp-content/uploads/2016/11/SoftRoCE_Paper_FINAL.pdf

HowTo Configure Soft-RoCE

https://community.mellanox.com/s/article/howto-configure-soft-roce

rxe

https://man7.org/linux/man-pages/man7/rxe.7.html

rdma-link - rdma link configuration

http://manpages.ubuntu.com/manpages/focal/man8/rdma-link.8.html

Kernel UDP tunneling framework

https://www.programmersought.com/article/299627450/

An introduction to Linux virtual interfaces: Tunnels

https://developers.redhat.com/blog/2019/05/17/an-introduction-to-linux-virtual-interfaces-tunnels/

Last modified on 2021-02-21