Boost.Pool 默认实现了两种 allocator,即 pool_allocator 和 fast_pool_allocator,在测试时发现前者在容器 clear 性能巨差无比,因此仔细阅读了一下内部的代码实现,问题出在保序释放时调用的 find_prev 接口:

// boost::simple_segregated_storage::find_prev

template <typename SizeType>

void * simple_segregated_storage<SizeType>::find_prev(void * const ptr)

{

// Handle border case.

if (first == 0 || std::greater<void *>()(first, ptr))

return 0;

void * iter = first;

while (true)

{

// if we're about to hit the end, or if we've found where "ptr" goes.

if (nextof(iter) == 0 || std::greater<void *>()(nextof(iter), ptr))

return iter;

iter = nextof(iter);

}

}

这是一个线性查找的过程,性能可想而知。

pool_allocator 虽然 free 操作效率感人,但相比 fast_pool_allocator 最大的优势是当容器进行 clear 之后,pool::release_memory 能释放内存,而 fast_pool_allocator 由于 insert 与 erase 顺序的可能不一致(以 std::map 容器为例),导致遍历 chunk 时无法释放所属的 block(注意下面 for (char * i = ptr.begin(); i != ptr.end(); i += partition_size) 循环的处理):

template <typename UserAllocator>

bool pool<UserAllocator>::release_memory()

{ //! pool must be ordered. Frees every memory block that doesn't have any allocated chunks.

//! \returns true if at least one memory block was freed.

// ret is the return value: it will be set to true when we actually call

// UserAllocator::free(..)

bool ret = false;

// This is a current & previous iterator pair over the memory block list

details::PODptr<size_type> ptr = list;

details::PODptr<size_type> prev;

// This is a current & previous iterator pair over the free memory chunk list

// Note that "prev_free" in this case does NOT point to the previous memory

// chunk in the free list, but rather the last free memory chunk before the

// current block.

void * free_p = this->first;

void * prev_free_p = 0;

const size_type partition_size = alloc_size();

// Search through all the all the allocated memory blocks

while (ptr.valid())

{

// At this point:

// ptr points to a valid memory block

// free_p points to either:

// 0 if there are no more free chunks

// the first free chunk in this or some next memory block

// prev_free_p points to either:

// the last free chunk in some previous memory block

// 0 if there is no such free chunk

// prev is either:

// the PODptr whose next() is ptr

// !valid() if there is no such PODptr

// If there are no more free memory chunks, then every remaining

// block is allocated out to its fullest capacity, and we can't

// release any more memory

if (free_p == 0)

break;

// We have to check all the chunks. If they are *all* free (i.e., present

// in the free list), then we can free the block.

bool all_chunks_free = true;

// Iterate 'i' through all chunks in the memory block

// if free starts in the memory block, be careful to keep it there

void * saved_free = free_p;

for (char * i = ptr.begin(); i != ptr.end(); i += partition_size)

{

// If this chunk is not free

if (i != free_p)

{

// We won't be able to free this block

all_chunks_free = false;

// free_p might have travelled outside ptr

free_p = saved_free;

// Abort searching the chunks; we won't be able to free this

// block because a chunk is not free.

break;

}

// We do not increment prev_free_p because we are in the same block

free_p = nextof(free_p);

}

// post: if the memory block has any chunks, free_p points to one of them

// otherwise, our assertions above are still valid

const details::PODptr<size_type> next = ptr.next();

if (!all_chunks_free)

{

if (is_from(free_p, ptr.begin(), ptr.element_size()))

{

std::less<void *> lt;

void * const end = ptr.end();

do

{

prev_free_p = free_p;

free_p = nextof(free_p);

} while (free_p && lt(free_p, end));

}

// This invariant is now restored:

// free_p points to the first free chunk in some next memory block, or

// 0 if there is no such chunk.

// prev_free_p points to the last free chunk in this memory block.

// We are just about to advance ptr. Maintain the invariant:

// prev is the PODptr whose next() is ptr, or !valid()

// if there is no such PODptr

prev = ptr;

}

else

{

// All chunks from this block are free

// Remove block from list

if (prev.valid())

prev.next(next);

else

list = next;

// Remove all entries in the free list from this block

if (prev_free_p != 0)

nextof(prev_free_p) = free_p;

else

this->first = free_p;

// And release memory

(UserAllocator::free)(ptr.begin());

ret = true;

}

// Increment ptr

ptr = next;

}

next_size = start_size;

return ret;

}

如果容器在使用完之后会 clear,且内存池由该容器独占使用,那直接使用 purge_memory 释放所有内存 block 是比较靠谱的方法。

template <typename UserAllocator>

bool pool<UserAllocator>::purge_memory()

{ //! pool must be ordered.

//! Frees every memory block.

//!

//! This function invalidates any pointers previously returned

//! by allocation functions of t.

//! \returns true if at least one memory block was freed.

details::PODptr<size_type> iter = list;

if (!iter.valid())

return false;

do

{

// hold "next" pointer

const details::PODptr<size_type> next = iter.next();

// delete the storage

(UserAllocator::free)(iter.begin());

// increment iter

iter = next;

} while (iter.valid());

list.invalidate();

this->first = 0;

next_size = start_size;

return true;

}

注意:

std::map, std::list 之类的容器除用户数据本身之外,还需要相应的关联指针等内部数据,因此在构造底层的 singleton_pool 内存池实例时,会存在两个内存池实例,一个是原始的 pool_allocator 或 fast_pool_allocator 构造时创建的,还一个容器构造内部节点时 rebind 得到的。原始的内存池并没有用来分配内存,因此需要先声明一个内部 rebind 的内存池类型,然后才能使用该类型调用 release_memory 或 purge_memory 静态方法。

相关问题

下面是 stackoverflow 上类似的一个问题,由于年代太久远,图片已经失效了,因此我抢救性保存一下。

Q

I’m using boost.pool, but I don’t know when to use boost::pool<>::malloc and boost::pool<>::ordered_malloc?

so,

- what’s the difference of

boost::pool<>::mallocandboost::pool<>::ordered_malloc? - when should I use

boost::pool<>::ordered_malloc?

A

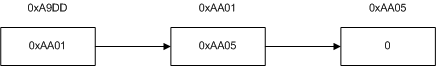

First, we should know the basic idea behind the Boost Pool library: simple_segregated_storage, it is similar to a singly linked list, and responsible for partitioning a memory block into fixed-size chunks:

A memory pool keeps a free list of memory chunks. So we mentioned blocks and chunks: the memory pool uses new or malloc to allocate a memory block and divides it into many memory chunks which have same size.

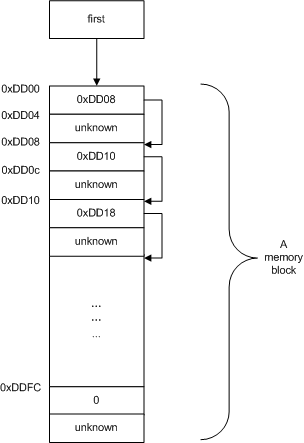

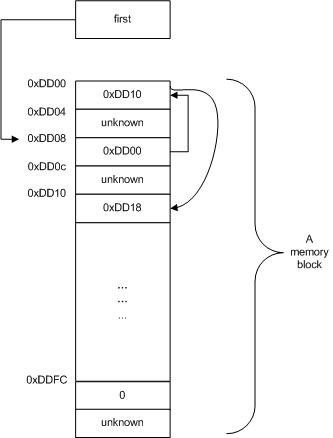

Assume the address is aligned by 8, 4 bytes for storing the address of next chunk, so a memory block(8 bytes * 32 chunks) is as below(the memory address is just for illustrating the question, not a real one):

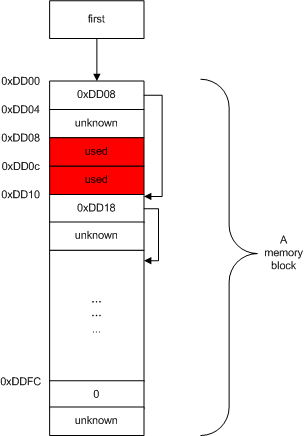

Now, suppose the user allocates 8 bytes memory twice, so the chunks: [0xDD00,0xDD08), [0xDD08,0xDD10) are used. After a while, the user releases the memory at [0xDD00,0xDD08), so this chunk will go back to the free list. Now the block is like this:

Afterwards the user releases the memory at [0xDD08,0xDD10), the simplest way to place this chunk back in the list is to update the first to point to it, constant time complexity. the simple_segregated_storage<T>::free() is doing this exactly:

void free BOOST_PREVENT_MACRO_SUBSTITUTION(void * const chunk)

{ //! Free a chunk.

//! \pre chunk was previously returned from a malloc() referring to the same free list.

//! \post !empty()

BOOST_POOL_VALIDATE_INTERNALS

nextof(chunk) = first;

first = chunk;

BOOST_POOL_VALIDATE_INTERNALS

}

After that, the list would be like this:

Now we noticed the list of chunks are not ordered by their address after these operations! If we want to preserve the order while de-allocating, call pool<>::ordered_free() instead of pool<>::free() to places the memory back in the list in its proper order. Now we’ve known what’s the order in the memory pool, let’s dig into the source code of boost::pool<>::malloc and boost::pool<>::ordered_malloc:

void * malloc BOOST_PREVENT_MACRO_SUBSTITUTION()

{

if (!store().empty())

return (store().malloc)();

return malloc_need_resize();

}

void * ordered_malloc()

{

if (!store().empty())

return (store().malloc)();

return ordered_malloc_need_resize();

}

As we can see, they differ only when there is no free chunk in the list of memory blocks. In this scenario, it allocates a new memory block, merges its free list to pool’s free list, the difference between these two methods is that boost::pool<>::ordered_malloc preserves order while merging the free lists.

Above is for question 1.

So, why does the order matter?! It seems the memory pool works perfectly with the unordered chunks!

First, if we want to find a contiguous sequence of n chunks, the ordered free list would make it easier. Second, Let’s have a look at the derived class of boost::pool: boost::object_pool, it provides automatic destruction of non-deallocated objects on destruction of the object_pool object while you can also destroy the object manually, for example:

class X { … };

void func()

{

boost::object_pool<X> alloc;

X* obj1 = alloc.construct();

X* obj2 = alloc.construct();

alloc.destroy(obj2);

}

the code above is OK, no memory leak or double delete! How does boost::object_pool do this magic? Let’s find the implementation of destructor of boost::object_pool(I have boost 1.48 on my machine):

template <typename T, typename UserAllocator>

object_pool<T, UserAllocator>::~object_pool()

{

#ifndef BOOST_POOL_VALGRIND

// handle trivial case of invalid list.

if (!this->list.valid())

return;

details::PODptr<size_type> iter = this->list;

details::PODptr<size_type> next = iter;

// Start 'freed_iter' at beginning of free list

void * freed_iter = this->first;

const size_type partition_size = this->alloc_size();

do

{

// increment next

next = next.next();

// delete all contained objects that aren't freed.

// Iterate 'i' through all chunks in the memory block.

for (char * i = iter.begin(); i != iter.end(); i += partition_size)

{

// If this chunk is free,

if (i == freed_iter)

{

// Increment freed_iter to point to next in free list.

freed_iter = nextof(freed_iter);

// Continue searching chunks in the memory block.

continue;

}

// This chunk is not free (allocated), so call its destructor,

static_cast<T *>(static_cast<void *>(i))->~T();

// and continue searching chunks in the memory block.

}

// free storage.

(UserAllocator::free)(iter.begin());

// increment iter.

iter = next;

} while (iter.valid());

// Make the block list empty so that the inherited destructor doesn't try to

// free it again.

this->list.invalidate();

#else

// destruct all used elements:

for(std::set<void*>::iterator pos = this->used_list.begin(); pos != this->used_list.end(); ++pos)

{

static_cast<T*>(*pos)->~T();

}

// base class will actually free the memory...

#endif

}

it goes through all the chunks in the list of memory blocks(list, the data member of boost::pool<>, holds the locations and sizes of all memory blocks allocated from the system) to find whether any chunk in it also shows in the free list, if not, calls the destructor of the object, then free the memory. So it’s kind of getting the intersection of two sets, just as std::set_intersection() does! If the list is sorted, it would be much faster to do that. Actually in the boost::object_pool<>, the order is required, the public member functions: boost::object_pool<>::malloc() and boost::object_pool<>::free() just call the boost::pool<>::ordered_malloc() and boost::pool<>::ordered_free() respectively:

element_type * malloc BOOST_PREVENT_MACRO_SUBSTITUTION()

{ //! Allocates memory that can hold one object of type ElementType.

//!

//! If out of memory, returns 0.

//!

//! Amortized O(1).

return static_cast<element_type *>(store().ordered_malloc());

}

void free BOOST_PREVENT_MACRO_SUBSTITUTION(element_type * const chunk)

{ //! De-Allocates memory that holds a chunk of type ElementType.

//!

//! Note that p may not be 0.\n

//!

//! Note that the destructor for p is not called. O(N).

store().ordered_free(chunk);

}

So for the queston 2: You needn’t use boost::pool<>::ordered_malloc in most situations.

参考资料

what’s the difference between boost::pool<>::malloc and boost::pool<>::ordered_malloc, and when should I use boost::pool<>::ordered_malloc?

Is Boost Pool free efficiency O(n) or O(1)

https://stackoverflow.com/questions/5288375/is-boost-pool-free-efficiency-on-or-o1

Is there some way to use boost::obect_pool with faster free operations

最后修改于 2019-08-26